Chapter 8 of the book A First Course in Numerical Methods (on eigenvalues and singular values) presents an inverse iteration method on page 228 (at least in the edition that I have).

Related MATLAB files:

- MATLAB m-file for the Inverse Power Method, for iterative approximation of the eigenvalue closest to 0 (in modulus)
- ShiftInvPowerMethod.m: MATLAB m-file for the Shifted Inverse Power Method, for iterative approximation of eigenvalues
- descent.m: MATLAB script to experiment with descent methods for solving Ax = b
- descent1.m: MATLAB m-file for generic descent

Rayleigh quotient iteration is very similar to inverse iteration, but replaces the estimated eigenvalue at the end of each iteration with the Rayleigh quotient. Begin by choosing some value $\mu_0$ as the initial eigenvalue estimate. The algorithm converges cubically for Hermitian or symmetric matrices, given an initial vector that is sufficiently close to an eigenvector of the matrix being analyzed; in a worked example, the cubic convergence is evident from the first few iterates. A simple implementation of the algorithm can be written in Octave.
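Rayleigh quotient iteration as described above can be sketched in a few lines. The following is a minimal illustration in plain Python rather than Octave, assuming a small real symmetric matrix stored as a list of rows. The function names, the test matrix, the starting vector, and the stopping tolerance are all hypothetical choices made for this example, and the hand-written Gaussian elimination stands in for a library solver that real code would normally use.

```python
import math

def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (aug[r][n] - tail) / aug[r][r]
    return z

def rayleigh_quotient(A, x):
    """Return x^T A x / x^T x for a real vector x."""
    Ax = [sum(a * xj for a, xj in zip(row, x)) for row in A]
    return sum(xi * yi for xi, yi in zip(x, Ax)) / sum(xi * xi for xi in x)

def rayleigh_quotient_iteration(A, b, mu, max_iter=10, tol=1e-12):
    """Refine the eigenvalue guess mu and eigenvector guess b together."""
    n = len(A)
    for _ in range(max_iter):
        shifted = [[A[i][j] - (mu if i == j else 0.0) for j in range(n)]
                   for i in range(n)]
        try:
            y = solve(shifted, b)  # inverse-iteration step with shift mu
        except ZeroDivisionError:
            break  # A - mu*I is singular to working precision
        norm = math.sqrt(sum(v * v for v in y))
        b = [v / norm for v in y]
        new_mu = rayleigh_quotient(A, b)  # update the shift with the Rayleigh quotient
        converged = abs(new_mu - mu) < tol
        mu = new_mu
        if converged:
            break
    return mu, b

# Illustrative symmetric matrix (not taken from the source).
A = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]
mu, b = rayleigh_quotient_iteration(A, [1.0, -1.0, 1.0], 3.3)
print(mu)
```

Printing `mu` after each pass instead of only at the end is an easy way to watch the number of correct digits grow from one iterate to the next.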

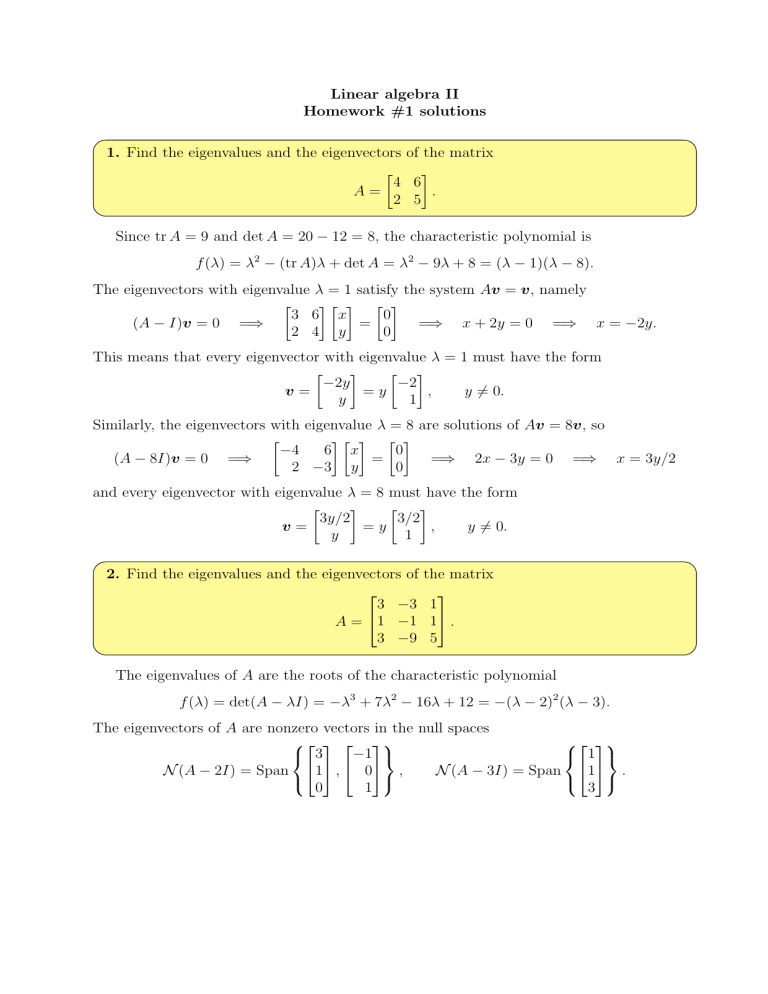

This discussion covers eigenvalues and eigenvectors, the power method, the inverse power method, and the Rayleigh quotient, with a brief overview of Rayleigh quotient iteration.

Eigenvalues and eigenvectors are properties of a square matrix. Let $A$ be an $N \times N$ matrix, $X$ a vector of size $N \times 1$, and $\lambda$ a scalar. Then the values $X$ and $\lambda$ satisfying the equation $AX = \lambda X$ are the eigenvectors and eigenvalues of $A$, respectively. Every eigenvalue corresponds to an eigenvector.

Are you looking for the largest eigenvalue or the eigenvalue with the largest magnitude? For magnitude, `a = rand(1000); max(abs(eig(a)))` is much slower, especially if you want to repeat it multiple times, because it computes all of the eigenvalues and then picks the maximum.

Rayleigh quotient iteration is an eigenvalue algorithm which extends the idea of inverse iteration by using the Rayleigh quotient to obtain increasingly accurate eigenvalue estimates. It is an iterative method, that is, it delivers a sequence of approximate solutions that converges to a true solution in the limit. Very rapid convergence is guaranteed and no more than a few iterations are needed in practice to obtain a reasonable approximation.

For a symmetric matrix $A$, upon convergence, $AQ = Q\Lambda$, where $\Lambda$ is the diagonal matrix of eigenvalues to which $A$ converged, and $Q$ is a composite of all the orthogonal similarity transforms required to get there. In the power method, the vector converges to an eigenvector of the largest eigenvalue. Instead, the QR algorithm works with a complete basis of vectors, using QR decomposition to renormalize (and orthogonalize).

The inverse power method is simply the power method applied to $(A - \mu I)^{-1}$. Algorithm 3 (inverse power method with a fixed shift), from lecture slides on power and inverse power methods (Taiwan Normal Univ.): choose an initial $u_0 \neq 0$; for $i = 0, 1, 2, \ldots$, compute $v_{i+1} = (A - \mu I)^{-1} u_i$ and the normalization factor $k_{i+1}$. As an exercise, use the method of inverse iteration to find the eigenvalue of the matrix of Example 11.3 nearest to 4.
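The inverse power method with a fixed shift (Algorithm 3 above) can be illustrated with a short sketch. It is written in plain Python under several assumptions that are not in the source: the shift is called `mu`, each iterate is normalized by its Euclidean norm, the final eigenvalue estimate is taken from the Rayleigh quotient, and the matrix is a made-up symmetric example (the matrix of Example 11.3 is not reproduced here). The function names are hypothetical.

```python
import math

def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (aug[r][n] - tail) / aug[r][r]
    return z

def inverse_iteration(A, mu, u, iterations=50):
    """Approximate the eigenpair of A whose eigenvalue is nearest the shift mu."""
    n = len(A)
    # The shift stays fixed, so A - mu*I is formed only once.
    shifted = [[A[i][j] - (mu if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    for _ in range(iterations):
        v = solve(shifted, u)                   # v = (A - mu*I)^{-1} u
        k = math.sqrt(sum(x * x for x in v))    # Euclidean norm (an assumption)
        u = [x / k for x in v]
    # Rayleigh quotient of the unit vector u gives the eigenvalue estimate.
    Au = [sum(a * x for a, x in zip(row, u)) for row in A]
    return sum(x * y for x, y in zip(u, Au)), u

# Illustrative symmetric matrix; the shift 1.9 lies closest to the eigenvalue 2.
A = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]
lam, vec = inverse_iteration(A, 1.9, [1.0, 0.0, 0.0])
print(lam)
```

Because the shift never changes, a practical implementation would factor the shifted matrix once and reuse the factorization in every iteration instead of repeating the full elimination.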

Recall that the power algorithm repeatedly multiplies A times a single vector, normalizing after each iteration. The QR algorithm can be seen as a more sophisticated variation of the basic "power" eigenvalue algorithm.
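The power iteration recalled above (multiply by A, then normalize) might look like the following in plain Python. This is only a sketch: the function name, the fixed iteration count, the Euclidean normalization, and the example matrix are assumptions made for illustration.

```python
import math

def power_method(A, x, iterations=200):
    """Power iteration on a square matrix A given as a list of rows."""
    lam = 0.0
    for _ in range(iterations):
        # Multiply A by the current vector.
        y = [sum(a * xj for a, xj in zip(row, x)) for row in A]
        # Normalize so the iterate keeps unit Euclidean length.
        norm = math.sqrt(sum(v * v for v in y))
        x = [v / norm for v in y]
        # Rayleigh quotient x^T A x (x has unit length) as the eigenvalue estimate.
        Ax = [sum(a * xj for a, xj in zip(row, x)) for row in A]
        lam = sum(xi * yi for xi, yi in zip(x, Ax))
    return lam, x

# Illustrative symmetric matrix whose largest eigenvalue is 2 + sqrt(2).
A = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]
lam, vec = power_method(A, [1.0, 0.0, 0.0])
print(lam)
```

The starting vector must have a nonzero component along the dominant eigenvector; a vector orthogonal to it would, in exact arithmetic, never converge to that eigenvector.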

Golub and Van Loan use the term Francis QR step. Rather than taking $x_k$ as our approximate eigenvector, it is natural to ask for the 'best' approximate eigenvector in the Krylov subspace $\mathcal{K}_k$, i.e., the best linear combination of the vectors that span it.

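In the case of the power method, this Krylov subspace is $\mathcal{K}_k(A, x_1) = \operatorname{span}\{x_1, Ax_1, A^2 x_1, \ldots, A^{k-1} x_1\}$, and in the case of inverse iteration this Krylov subspace is $\mathcal{K}_k((A - \mu I)^{-1}, x_1)$. Inverse iteration is the power method applied to $(A - \mu I)^{-1}$.

In the QR algorithm, each iterate is factored as $A_k = Q_k R_k$ and the factors are then multiplied in the reverse order:

$$A_{k+1} = R_k Q_k = Q_k^{-1} Q_k R_k Q_k = Q_k^{-1} A_k Q_k = Q_k^{T} A_k Q_k,$$

so all the $A_k$ are similar and therefore share the same eigenvalues. The iteration is repeated until a chosen convergence measure is sufficiently small. Since in the modern implicit version of the procedure no QR decompositions are explicitly performed, some authors, for instance Watkins, suggested changing its name to Francis algorithm.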
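The recurrence $A_{k+1} = R_k Q_k$ can be tried out directly. The sketch below is the basic explicit, unshifted QR iteration in plain Python, not the implicit Francis variant mentioned in the text. It assumes a small nonsingular symmetric matrix, uses modified Gram-Schmidt for the factorization (chosen here only for brevity), and runs a fixed number of iterations; all function names and the example matrix are hypothetical.

```python
import math

def qr_decompose(A):
    """QR factorization by modified Gram-Schmidt; returns (Q, R) as lists of rows."""
    n = len(A)
    cols = [[A[i][j] for i in range(n)] for j in range(n)]  # columns of A
    q_cols = []
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        v = cols[j][:]
        # Remove the components along the orthonormal columns found so far.
        for i, q in enumerate(q_cols):
            R[i][j] = sum(qk * vk for qk, vk in zip(q, v))
            v = [vk - R[i][j] * qk for qk, vk in zip(q, v)]
        R[j][j] = math.sqrt(sum(vk * vk for vk in v))
        q_cols.append([vk / R[j][j] for vk in v])
    Q = [[q_cols[j][i] for j in range(n)] for i in range(n)]
    return Q, R

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def qr_iteration(A, iterations=200):
    """Repeat A_k = Q_k R_k, A_{k+1} = R_k Q_k for a fixed number of steps."""
    Ak = [row[:] for row in A]
    for _ in range(iterations):
        Q, R = qr_decompose(Ak)
        Ak = matmul(R, Q)
    return Ak

# Illustrative symmetric matrix with eigenvalues 2 - sqrt(2), 2, 2 + sqrt(2).
A = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]
Ak = qr_iteration(A)
eigenvalues = sorted(Ak[i][i] for i in range(3))
print(eigenvalues)
```

For this matrix the iterates approach a diagonal matrix, so the diagonal of `Ak` serves as the eigenvalue estimate. A fixed iteration count keeps the sketch short; a stopping test on the size of the off-diagonal entries would be the more usual choice.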
